AshBuilder

THE STORY BEHIND THE CODE: A DEEP DIVE

- Summary with focus on technical aspects

- App description

- Technical details

- Technology and services used

Summary with focus on technical aspects

Six months of high-paced startup experience supporting a production ML-ops application built with Next.js, tRPC, and TypeScript. Contributed to new feature development, wrote end-to-end tests using Cypress, and led major frontend and database performance optimizations, including refactoring efforts.

App description

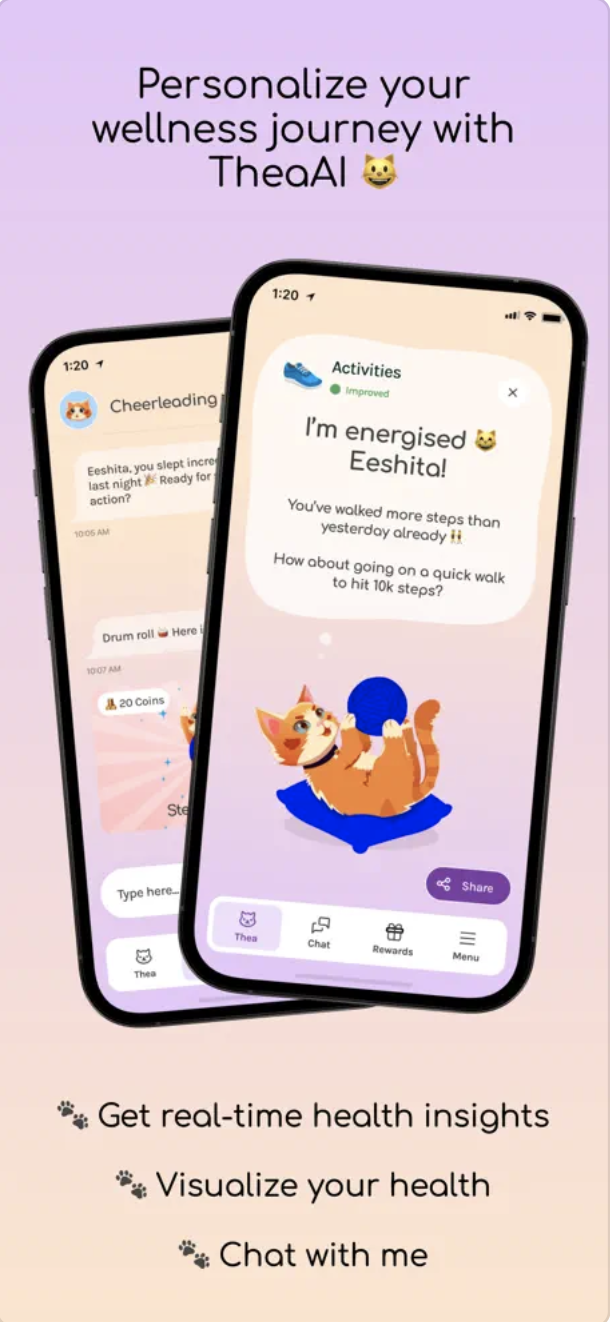

Slingshot is a well-funded startup tackling the mental health crisis, based on the insight that "there just aren't enough people or resources to help everyone in need." In January 2024, they began building a foundational AI model for psychology, resulting in Ash—an AI therapist mobile app.

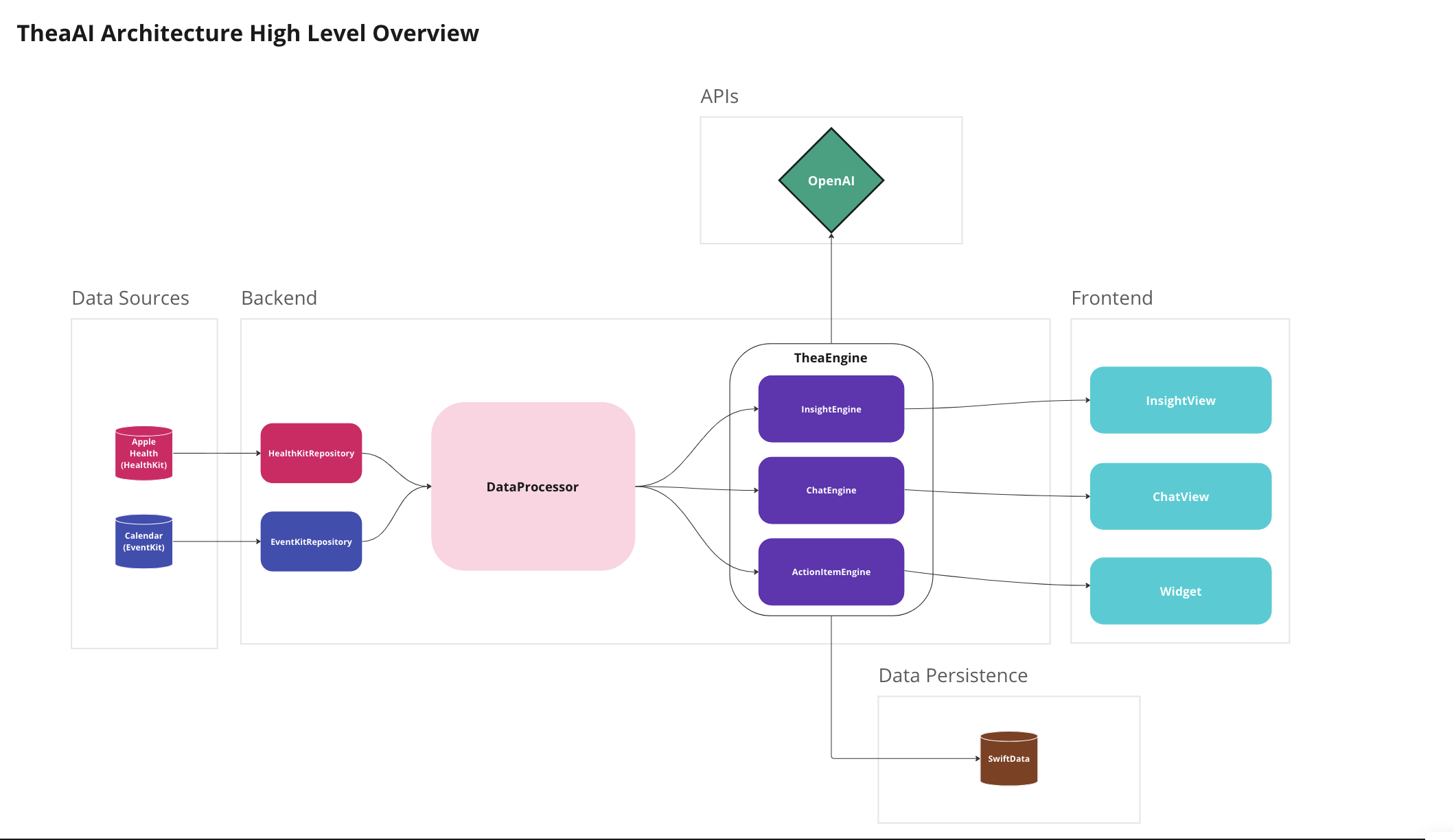

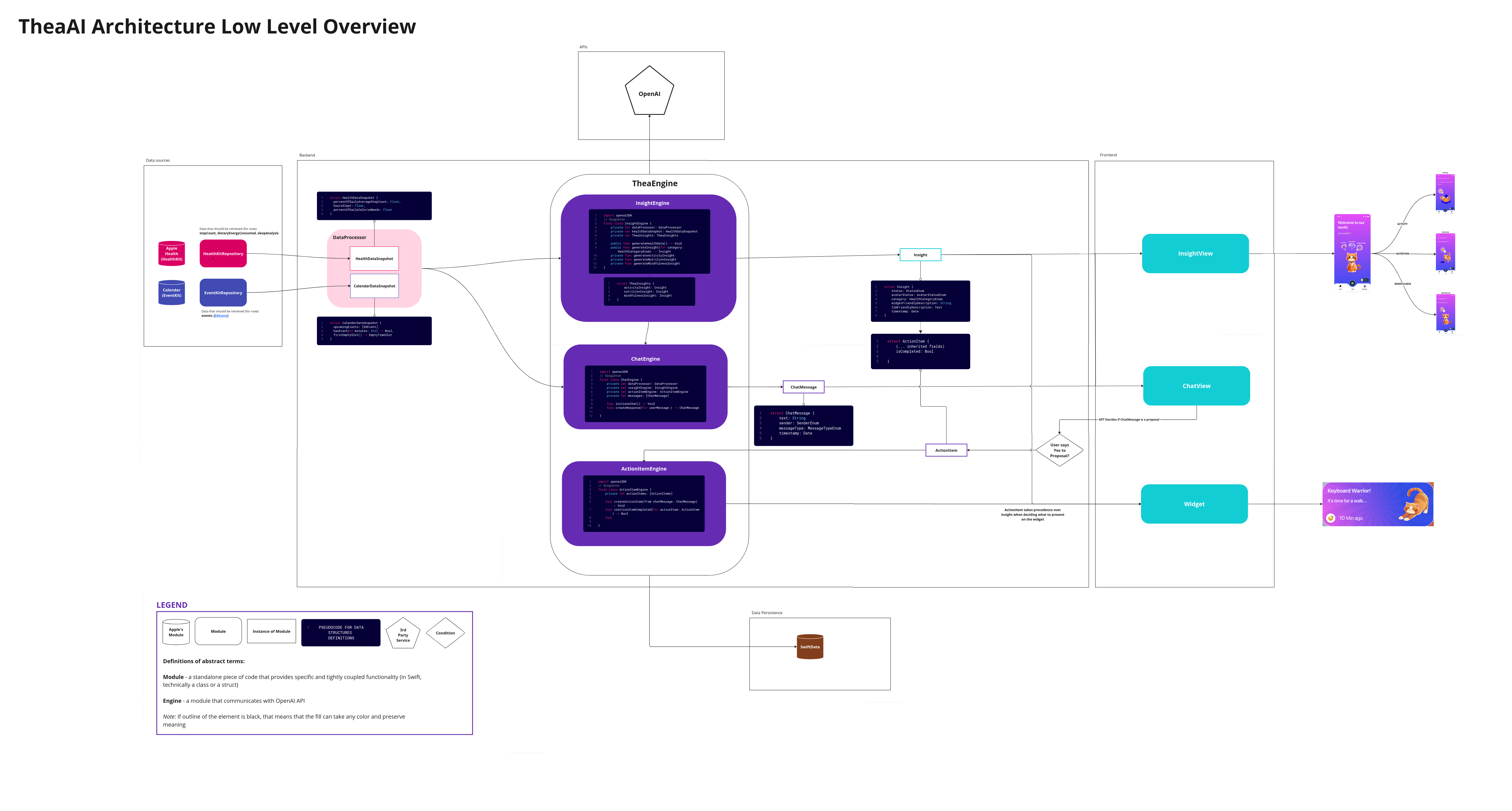

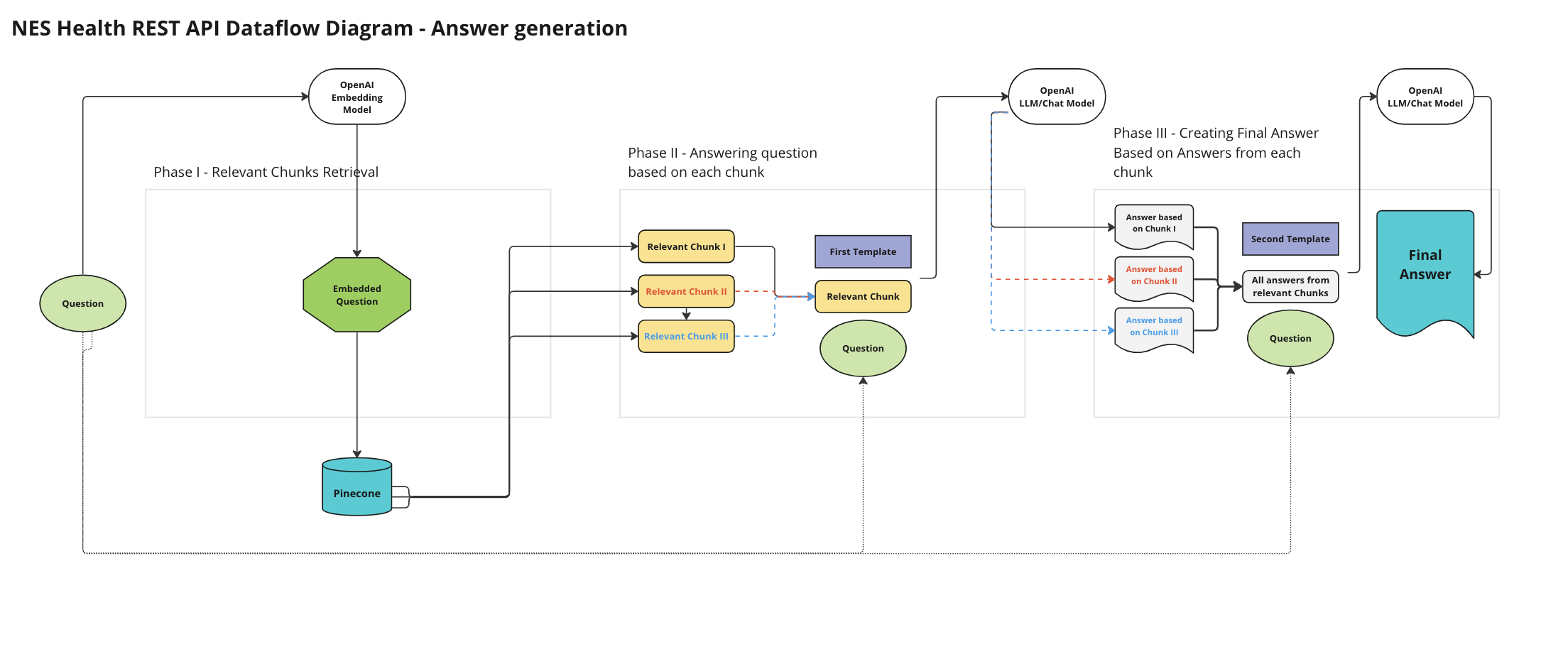

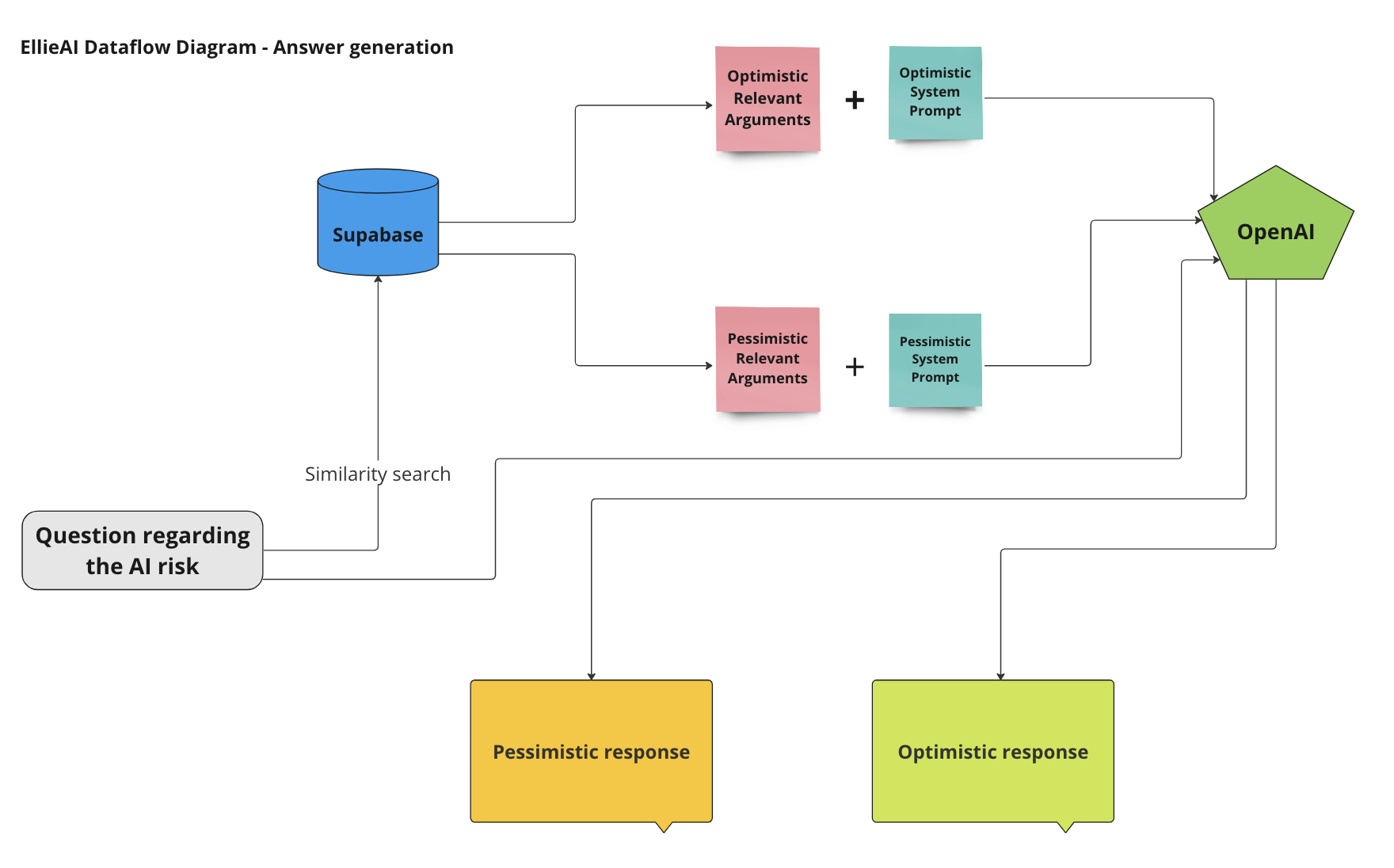

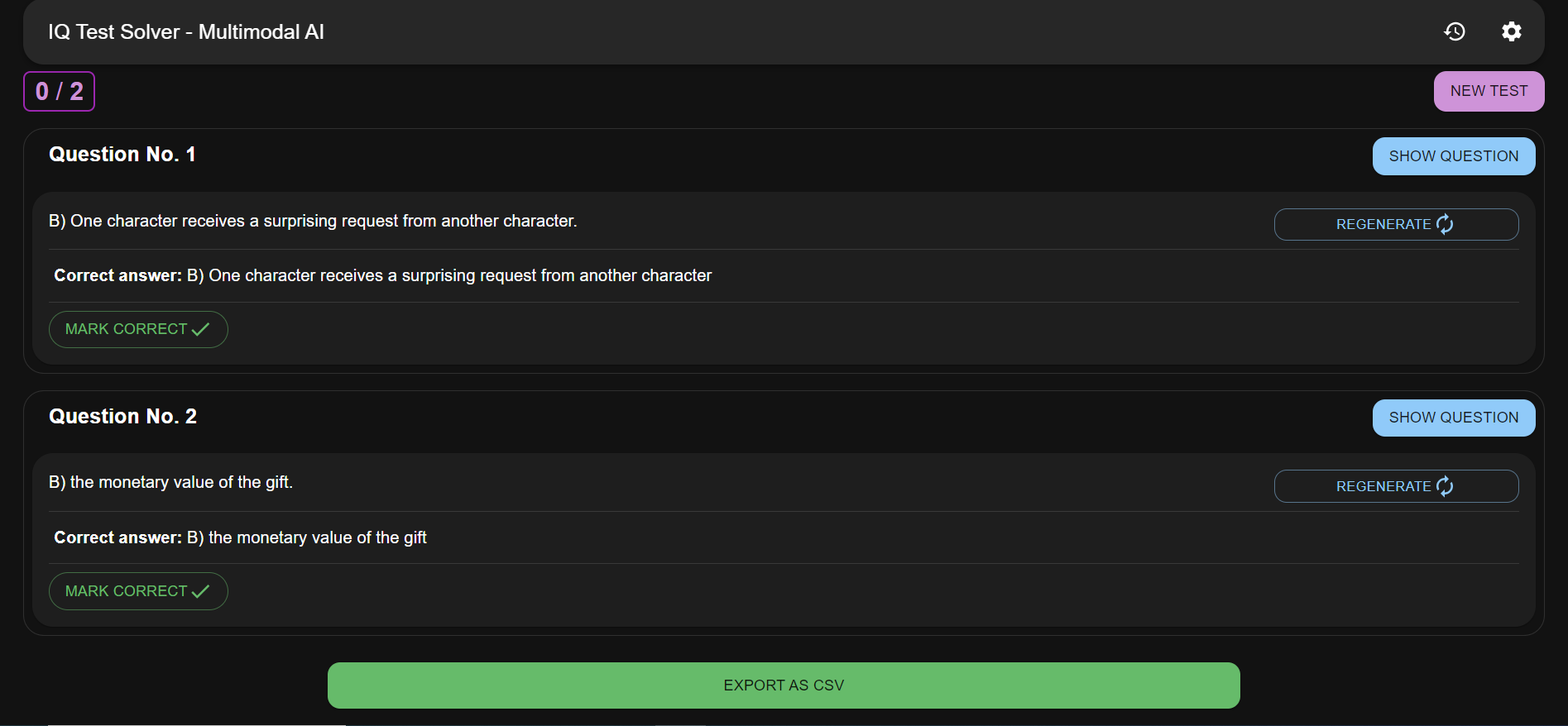

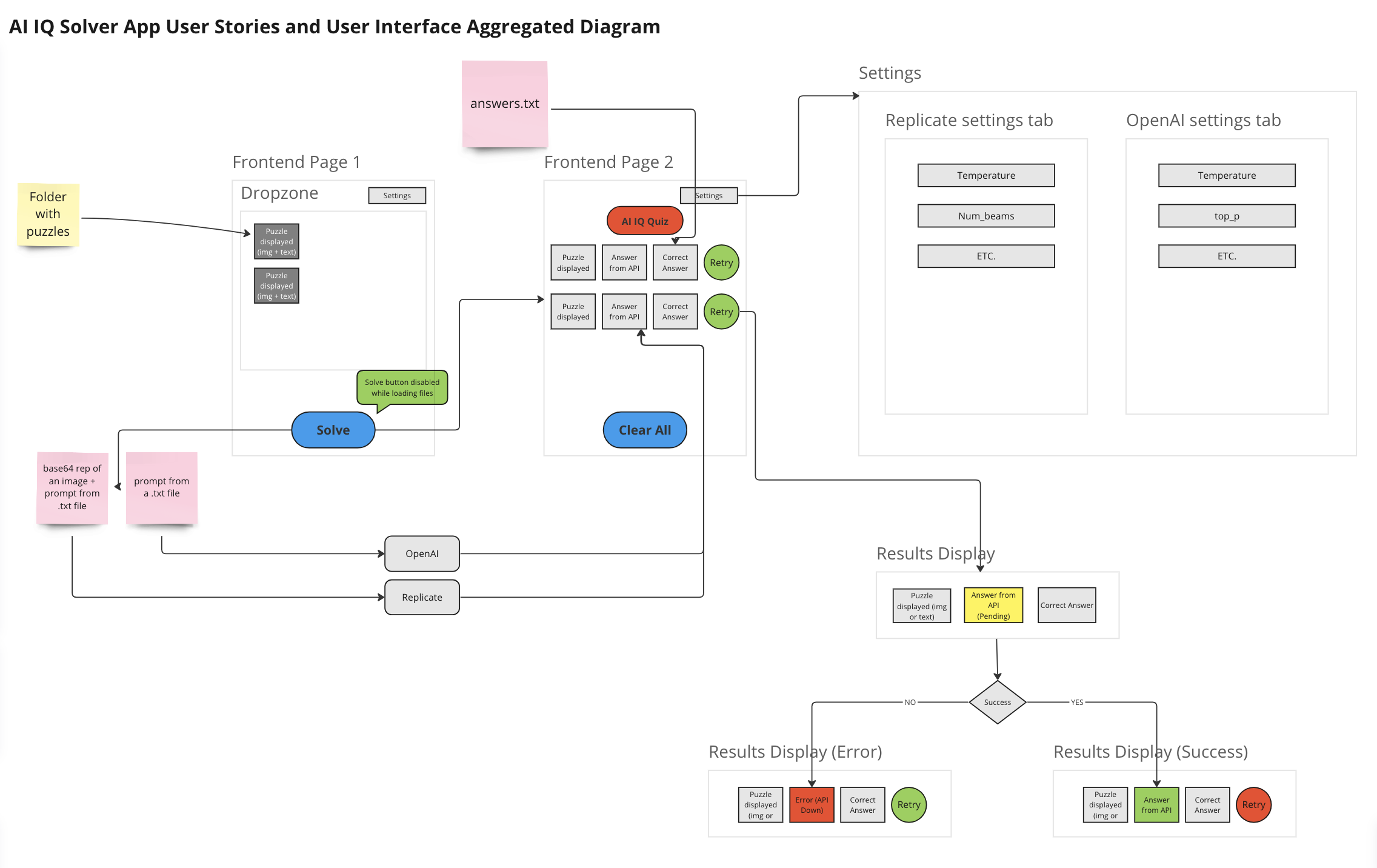

To support this, the team developed AshBuilder, a web app designed to centralize the creation and annotation of therapeutic dialogues by writers—either psychology experts or experienced writers studying psychological literature. These dialogues, along with annotations on model responses, were used to fine-tune internal AI models developed by the ML team. Writers could create conversations by typing, roleplaying (e.g., one as therapist, one as patient), or using speech-to-text features while recording themselves. They could also generate responses from a variety of state-of-the-art LLMs (e.g., GPT-4o by OpenAI, LLaMA or Mistral hosted on Groq, or Gemini by Google DeepMind), as well as internal models, to identify failure cases and contextual weaknesses. The system allowed dynamic integration of new LLMs and removal of unused ones.Another key feature was the ability to preview and annotate anonymized production conversations from the Ash chatbot, without storing this content in AshBuilder's database—respecting data separation and privacy policies.

The app included robust role-based user management. For example, certain writers could be granted access to create therapeutic dialogues end-to-end while being restricted from viewing sensitive AI-chat production conversations. Additional LLM-assisted tooling was introduced, such as: LLM-based prompt generation, helping writers test various prompts for generating better responses from the internal model. LLM-based Context generation, allowing users to quickly understand the full context behind a problematic AI response—potentially hundreds of messages into a conversation—without reading the entire thread.Technical details

Initially, before joining the efforts, the app was built using NextJS, React-Query, Supabase, and TypeScript, not fully adhering to clean code principles, as the initial goal was to have writers get to work as soon as possible.

The first challenge I got to work on was a full refactoring, introducing Zod and tRPC to maximize type-safety, which significantly reduced bugs when implementing new features or making changes. I also redesigned parts of the PostgreSQL (Supabase) schema to improve performance. For example, I changed the message storage strategy from one message per row to a single JSONL column due to inefficient querying patterns when retrieving full conversations—specifically, the need for JOIN operations over hundreds of rows per session, which introduced latency and complexity. Since the common usage pattern involved always reading entire conversations rather than individual messages, storing them as JSONL drastically improved performance. While there was a need to update individual messages, early experimentation showed this wasn't a bottleneck in practice (updating relatively large JSONL was fast), and the performance benefits of reading full conversations as a single document far outweighed the trade-offs. To better understand the writers' workflows while contributing to the codebase, I wrote end-to-end tests using Cypress and integrated them into the existing CI workflow with GitHub Actions. The tests ran against Vercel preview deployments, ensuring reliable functionality in every preview, before merging in.

Continuous sharpening of debugging skills, effective communication, and effective use of development tools were essential in this role, as any downtime or bugs could block writers during their most creative and cognitively demanding work—something we worked hard to avoid. As part of improving debugging skills, I learned to set up and efficiently use the VSCode debugger for full-stack NextJS debugging.

A key consideration was ensuring that writers never lost their work in progress. To address this, we heavily relied on local storage, allowing for seamless recovery of data in case of unexpected interruptions. One of the reasons we chose Jotai for global state management was its built-in support for a persistent state, making it an ideal fit for this requirement. This ensured that writers could continue their work without worrying about losing any unsaved progress.

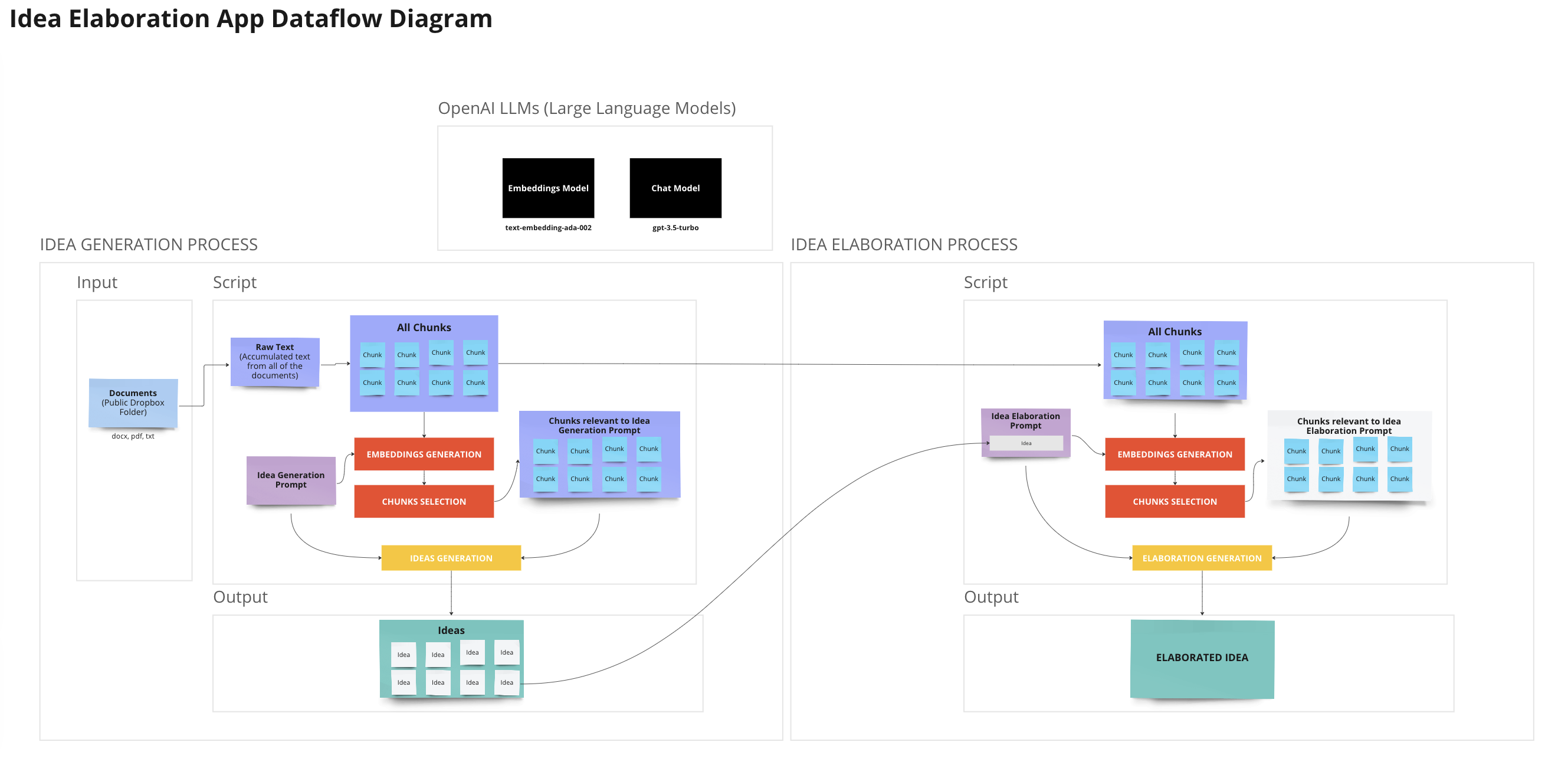

LLM-based context generation refers to the process of using a large language model (LLM) like GPT-4o to provide context around a specific AI response in a conversation. This is particularly useful when dealing with problematic responses that might be hundreds of messages into a conversation. Instead of manually reading through the entire thread, the system dynamically generates a concise context, helping users quickly understand the relevant background information leading up to the issue.

From a technical standpoint, we implemented this by using a hardcoded prompt that would extract the beginning portion of the conversation—up to the token limit of GPT-4o—ensuring that enough context was captured to inform the analysis. To handle the high-frequency demand for this context generation, we utilized Upstash-hosted Redis for caching. We generated unique hashed keys based on the content of the conversation messages, which allowed us to cache the context analysis and retrieve it efficiently. This caching ensured that as long as the message content didn't change, all writers would receive the same cached context, reducing redundant processing. To adhere to privacy policies, we encrypted the message content before storing it in Redis, ensuring that the data was only accessible through the internal service, maintaining confidentiality.

Given the importance of quick debugging, I gained extensive experience setting up and using Sentry to capture detailed insights during troubleshooting. For example, when writers experienced noticeable latencies while using LLMs, I implemented time-to-first-token tracking. This allowed us to pinpoint whether the latency was caused by the LLM providers, our system, or the writers' internet connection, enabling us to optimize the process more effectively.

As part of improving backend performance, particularly in anticipating bottlenecks as the volume of annotations and conversations grew, I gained hands-on experience with SQL query optimization and utilized query analysis tools to identify inefficiencies. This allowed us to fine-tune queries and ensure the system could scale effectively as data increased.

Due to the fast-paced nature of the work, I learned the importance of avoiding premature optimizations and focusing on performance improvements only when necessary. For instance, when writers began experiencing lags that hindered their productivity, I implemented lazy loading and virtualization to address the issue, ensuring a smoother and more efficient environment for them to deliver business value.

Technology and services used

- TypeScript

- NextJS

- React-Query

- tRPC

- Supabase

- OpenAI

- Anthropic SDK

- Vercel AI SDK

- Redis

- Linear

- Vercel

- Sentry

- TailwindCSS

- Lodash